Incrementally download large amounts of data from Hugging Face

Recently, the Emilia dataset released YODAS, a 114,000-hour set of speech data. I had previously downloaded 100,000 hours of data, but I didn't keep the original repository after processing it, so I couldn't simply run git pull to update.

Before starting the download, verify that there is enough storage space: the 114,000-hour YODAS data, for example, requires about 2 TB. The dataset is hosted on Hugging Face, and you can download just one subdirectory with the snapshot_download function from the huggingface_hub Python package:

from huggingface_hub import snapshot_download

snapshot_download(

repo_id="amphion/Emilia-Dataset",

repo_type="dataset",

allow_patterns=["Emilia-YODAS/**"],

local_dir="./Emilia-YODAS",

tqdm_class=None

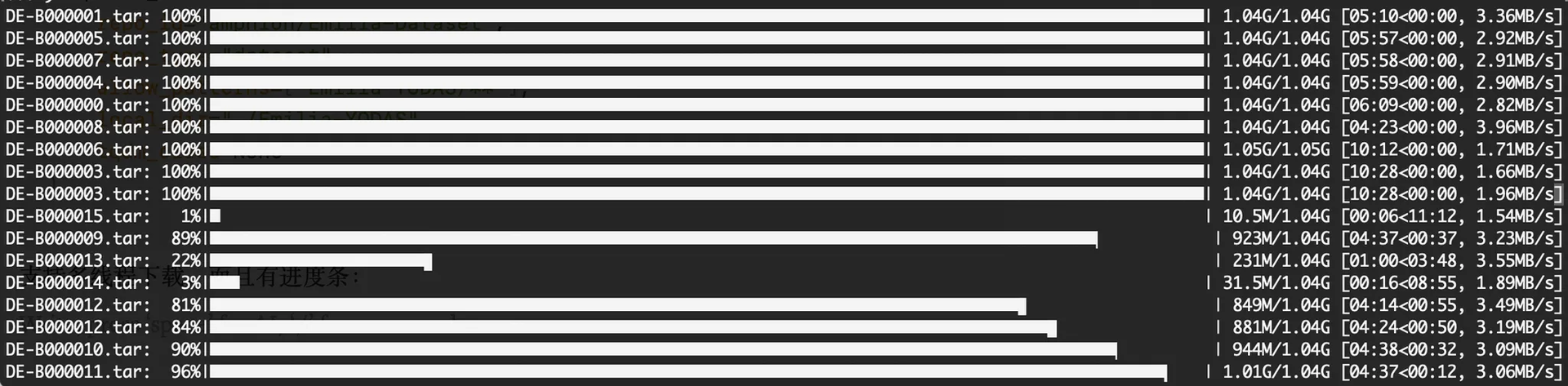

)It supports multi‑threaded downloading and includes a progress bar:
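As a rough illustration of the multi-threading, the sketch below wraps the same call in a hypothetical helper, download_subdir, and passes max_workers, the snapshot_download parameter that sets how many files are fetched concurrently. The helper name, the download_fn hook (there only so the call can be tried without network access), and the value 16 are assumptions for illustration, not part of the original setup.

```python
def download_subdir(repo_id, subdir, local_dir, max_workers=16, download_fn=None):
    """Download only `subdir` of a dataset repo, using `max_workers` threads.

    `download_fn` is a hypothetical hook for testing; by default the real
    huggingface_hub.snapshot_download is used.
    """
    if download_fn is None:
        # Imported lazily so the helper can be defined without the package.
        from huggingface_hub import snapshot_download
        download_fn = snapshot_download
    return download_fn(
        repo_id=repo_id,
        repo_type="dataset",
        allow_patterns=[f"{subdir}/**"],  # restrict to one subdirectory
        local_dir=local_dir,
        max_workers=max_workers,  # number of concurrent download threads
    )


# Example call (needs network access and about 2 TB of free space):
# download_subdir("amphion/Emilia-Dataset", "Emilia-YODAS", "./Emilia-YODAS")
```

More threads only help while the network link or the disk is not already saturated, so the best value depends on the machine.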

If the download is interrupted, running the command again will automatically skip the files that have already been downloaded, which is very convenient. This method also works for other large datasets hosted on Hugging Face, allowing you to download only the specific subdirectories you need.
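Before re-running an interrupted download, it can help to estimate how much is still missing and whether the disk has room for it. The following is a minimal sketch under some assumptions: the helper names pending_files, has_room, and remote_sizes_for are hypothetical, the 5% safety margin is arbitrary, and "already downloaded" is approximated as "a file with the same size exists locally", which is not how huggingface_hub itself tracks completed files.

```python
import os
import shutil


def pending_files(remote_sizes, local_dir):
    """Return {repo_path: size} for files missing locally or differing in size.

    `remote_sizes` maps repo-relative paths to sizes in bytes (None if unknown).
    """
    pending = {}
    for rel_path, size in remote_sizes.items():
        local_path = os.path.join(local_dir, *rel_path.split("/"))
        if not os.path.isfile(local_path):
            pending[rel_path] = size
        elif size is not None and os.path.getsize(local_path) != size:
            pending[rel_path] = size
    return pending


def has_room(path, needed_bytes, margin=0.05):
    """True if the filesystem holding `path` (which must exist) has enough free space."""
    free = shutil.disk_usage(path).free
    return free >= needed_bytes * (1 + margin)


def remote_sizes_for(repo_id, prefix):
    """Fetch {path: size} for repo files under `prefix` (needs network access)."""
    from huggingface_hub import HfApi

    info = HfApi().dataset_info(repo_id, files_metadata=True)
    return {
        s.rfilename: s.size
        for s in info.siblings
        if s.rfilename.startswith(prefix)
    }


# Example (needs network access; the target directory must already exist):
# sizes = remote_sizes_for("amphion/Emilia-Dataset", "Emilia-YODAS/")
# todo = pending_files(sizes, "./Emilia-YODAS")
# needed = sum(size or 0 for size in todo.values())
# print(len(todo), "files pending;", "enough space:", has_room("./Emilia-YODAS", needed))
```

This is only a pre-flight estimate; snapshot_download still decides on its own which files to skip when it runs.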